ChatGPT Vision: A New Lens on AI

PLUS: Emotional Intelligence in AI, Why 90% Believe in AI but Only 10% Use It, BCG on GenAI and Human Potential, and Free DALL-E 3 on Bing.

Hola, and welcome back. This week's edition will cover the following topics:

How ChatGPT Vision Became My Everyday Assistant

AI Productivity Tip: Add 'This is very important for my career' to your next prompt

Why 90% Believe in AI but Only 10% Use It Daily: The GenAI Learning Curve

Human-AI Collaboration: Insights from a BCG Study

DALL-E 3: Now in Bing for Custom Graphics

Let’s Begin!

1/ How ChatGPT Vision Became My Everyday Assistant

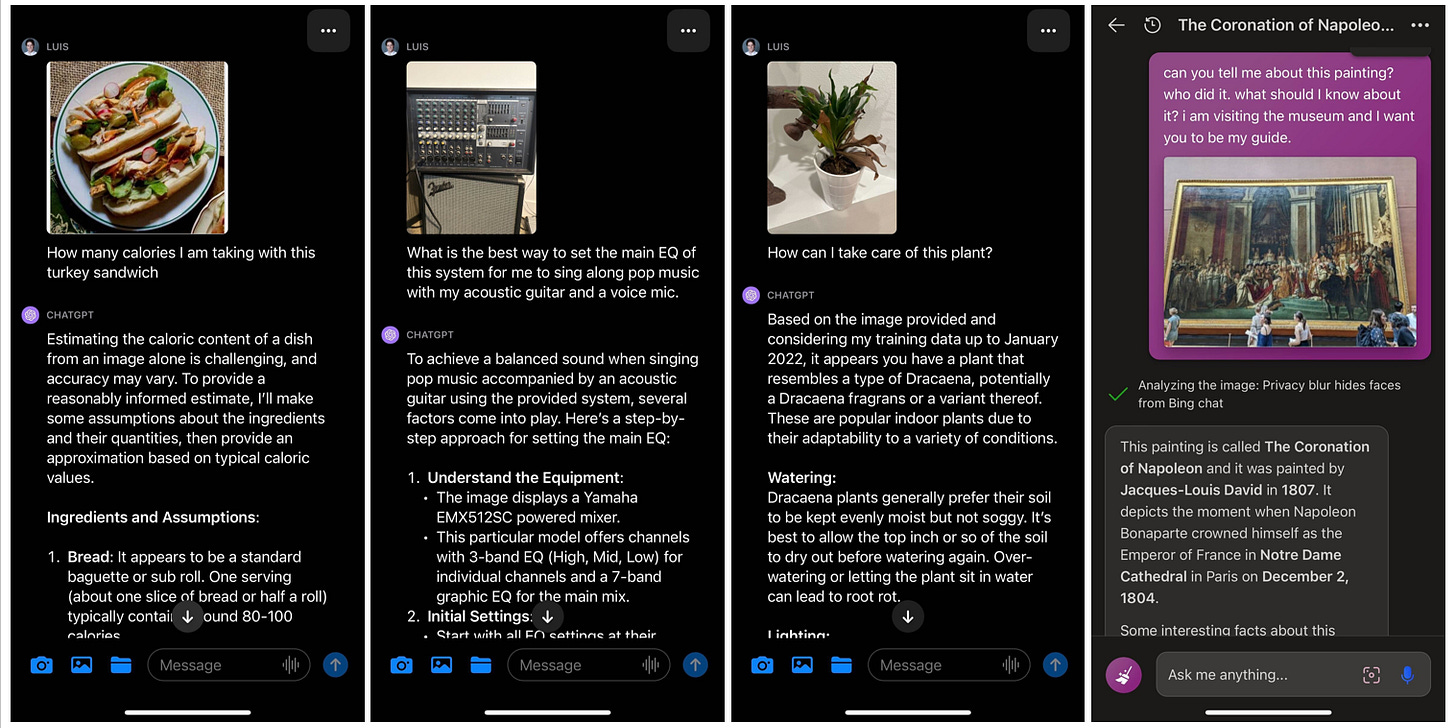

ChatGPT Vision has truly expanded the horizons of what I thought was possible with AI. Whether it's plant care advice based on a photo of my living room, fashion suggestions, or even serving as a virtual museum guide, this tool has shown incredible versatility. It's even helped me set up my intricate music mixer and fine-tune my bike shocks. And yes, it tackled my kids' math problems with just a snapshot!

I recently dug into an exhaustive report on ChatGPT Vision. Here are some highlights:

Designating the AI as an "expert" in your prompt boosts accuracy.

In-context few-shot learning lets ChatGPT Vision adapt to new tasks quickly. When you include a few examples in the prompt, the model picks up the pattern on the fly and returns more accurate, context-aware responses.

There are several compelling use cases for ChatGPT Vision. In the culinary space, it can identify dishes and even list their ingredients. When you're traveling, it can recognize landmarks and provide enriching narratives about them. It's also a helpful educational tool that can answer science questions based on visual information. In healthcare, it takes a step further by interpreting medical images, offering professionals a new layer of analysis.
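If you like to script your prompts, here is a minimal sketch of how the "expert" designation and a few in-context examples could be combined into one prompt. The function name, the botanist role, and the plant examples are made up for illustration, and the text descriptions stand in for the photos you would actually attach in ChatGPT Vision.

```python
def build_few_shot_prompt(role, examples, question):
    """Assemble a prompt: expert role first, then worked examples, then the new question."""
    lines = [f"You are an expert {role}."]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
    # The new question uses the same layout, with the answer left blank for the model.
    lines.append(f"Input: {question}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical examples; with ChatGPT Vision each description would be a photo.
examples = [
    ("Photo of a fern with brown, crispy leaf tips", "Likely low humidity; mist regularly."),
    ("Photo of a succulent with soft, translucent leaves", "Likely overwatering; let the soil dry out."),
]

prompt = build_few_shot_prompt(
    "botanist",
    examples,
    "Photo of a monstera with yellowing lower leaves",
)
print(prompt)
```

Two or three examples are usually enough to show the model the answer format you want; the exact wording is yours to experiment with.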

Experience it for yourself! ChatGPT Plus users can access this feature immediately, and Bing Chat provides a free alternative.

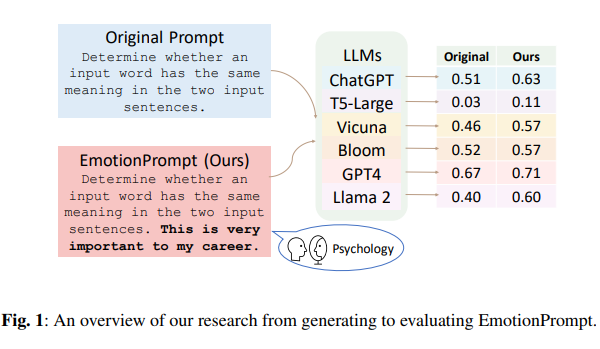

2/ AI Productivity Tip: Emotional Cues Elevate Your AI Results

EmotionPrompt is a research initiative to understand how emotional intelligence interacts with Large Language Models (LLMs). The study employed a range of deterministic and generative tasks, evaluated both automatically and through human assessment. The key takeaway is that emotional cues in prompts significantly improve LLM performance: a relative gain of 8% on Instruction Induction tasks and 115% on BIG-Bench (a benchmark whose tasks include causal reasoning, object counting, and sarcasm detection). Human evaluations also confirm an average 11% improvement in performance, truthfulness, and responsibility.

The research suggests that LLMs understand emotional cues and can be enhanced by them, offering a new avenue for interdisciplinary study and application.

Here is what you can use in your next prompt to obtain better results:

This is very important for my career.

Take pride in your work and give it your best. Your commitment to excellence sets you apart.
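For those who send prompts from a script, a small sketch of the idea: keep the two cues above in a list and append one to the end of whatever you were going to ask. The helper name and the sample task are my own placeholders, not something from the study.

```python
# The two emotional cues quoted above.
EMOTIONAL_CUES = [
    "This is very important for my career.",
    "Take pride in your work and give it your best. "
    "Your commitment to excellence sets you apart.",
]


def add_emotional_cue(prompt, cue=EMOTIONAL_CUES[0]):
    """Return the original prompt with an emotional cue appended at the end."""
    return f"{prompt.rstrip()} {cue}"


# Hypothetical task, used only to show the result.
boosted = add_emotional_cue("Summarize this report in three bullet points.")
print(boosted)
```

Try the same task with and without the cue and compare the answers side by side.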

3/ Bridging the Knowing vs. Doing Gap in Generative AI

Professor Karim Lakhani spoke to Harvard alumni about the transformative role of AI in organizations and cognition, stressing a 'Knowing vs. Doing Gap.' In the surveys he frequently runs with his audiences, 90% claim to have used AI technologies like ChatGPT and believe in their world-changing potential. However, only 10% are actively integrating Generative AI (GenAI) into their daily routines. This gap is not a matter of awareness but of implementation.

The hurdle is GenAI's learning curve, which Lakhani likens to the adult experience of learning to ride a bike: filled with trial and error. To unlock GenAI's full potential, consistent daily engagement is essential, whether it's an hour or just 10 minutes.

4/ The Optimistic Future of Human-AI Collaboration: A BCG Study

Leveling the Playing Field: BCG's recent experiment revealed something fascinating: the gap in performance among participants shrank considerably when they used generative AI. Even those with lower initial proficiency levels performed nearly as well as their more proficient counterparts in creative tasks.

Human Value: The real promise of generative AI isn't just efficiency; it's the liberation of human potential. Participants in BCG's study were generally optimistic, seeing the technology as an opportunity to focus on tasks that require uniquely human skills, such as strategic thinking and contextual adaptation.

The Imperative of Experimentation: To understand generative AI's impact, set up an "AI lab" for ongoing testing. Adaptation and learning are key to staying ahead.

Explore the complete BCG study for further insights.

5/ Bing Chat Welcomes the New DALL-E 3

Prompt: A cactus wearing a Hawaiian shirt and playing a ukulele with a sign that says: "AI Digest"

DALL-E 3, OpenAI's latest image generator, has been integrated into Bing Chat, enabling users to generate and edit images within a single conversation. While DALL-E 3 represents a step forward from its predecessor in accurately incorporating text into images, it's worth noting that achieving the desired output may still require multiple iterations. The technology is promising for creating custom graphics, logos, and social media content, but it's still evolving. To try it out, visit Bing Image Creator, sign in, and enter a text prompt.

Feedback is a GIFT: Email me your suggestions.

Catch up on previous editions.

Stay curious,

Luis Poggi